Open WebUI is a self-hosted web interface that can run entirely offline and supports LLM runners such as Ollama as well as OpenAI-compatible APIs. This guide walks through setting it up on your Mac with Docker.

Prerequisites

Before proceeding, ensure Docker is installed on your Mac. If not, follow this guide to get Docker up and running in just a few minutes.

If you need to install Ollama on your Mac before using Open WebUI, refer to this detailed step-by-step guide on installing Ollama.
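
A quick way to confirm both prerequisites is to check whether the commands are on your PATH. The snippet below is a minimal sketch: the helper name `have` is made up for illustration, and Ollama only matters if you plan to run local models through it.

```shell
# Return success if the given command is available on PATH.
# `have` is a hypothetical helper name, not part of Docker or Ollama.
have() {
  command -v "$1" >/dev/null 2>&1
}

# Report the status of each prerequisite.
for tool in docker ollama; do
  if have "$tool"; then
    echo "$tool: found"
  else
    echo "$tool: not found"
  fi
done
```

A "found" result for docker only shows that the command-line tool is installed; Docker itself also needs to be running before you move on to Step 1.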

Step 1: Pull and Run the Open WebUI Docker Image

Open your terminal and run the following command to download and run the Open WebUI Docker image:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

This command downloads the required Docker image layers and then starts the container, as shown in the terminal output in the image below. The -p 3000:8080 option publishes the container’s port 8080 on port 3000 of your Mac, and -v open-webui:/app/backend/data keeps your data in a named Docker volume so it survives container restarts.

As seen in the screenshot example, Docker pulls various layers of the Open WebUI image. The status indicators, like “Pull complete,” confirm the successful downloading and extraction of these layers.
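
Once the pull finishes, you may want to confirm that the container actually started. The following is a small sketch that wraps `docker ps`; the function name `container_status` is an illustrative assumption, while open-webui is the container name set by --name in the command above.

```shell
# Show the name and status of containers whose name matches the argument.
# `container_status` is a hypothetical helper name used for illustration.
container_status() {
  docker ps --all --filter "name=$1" --format '{{.Names}}: {{.Status}}'
}

# Example calls (these require a running Docker daemon):
#   container_status open-webui
#   docker logs --tail 20 open-webui
```

A status that begins with "Up" indicates the container is running. If it shows something else, the `docker logs` call prints the most recent output from the container, which is usually the first place to look.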

Step 2: Access Open WebUI

After the download completes, Docker will automatically start the Open WebUI container. You can then access the interface by navigating to http://localhost:3000 in your web browser.

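Open WebUI will prompt you to log in; on first launch, you create an account. Your account data is stored locally on your device and is not sent to any external service. Keep in mind that if you later connect Open WebUI to a remote API, the prompts you send to that API do leave your machine.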
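
If the page does not load right away, the container may still be starting up. One way to wait for it is to poll the address with curl, as in this sketch. The function name `wait_for_url`, the default of 30 attempts, and the 2-second pause are all assumptions chosen for illustration.

```shell
# Poll a URL until it responds successfully or the attempts run out.
# `wait_for_url` is a hypothetical helper; $2 is the number of attempts.
wait_for_url() {
  url=$1
  attempts=${2:-30}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # --fail makes curl return non-zero on HTTP error responses.
    if curl --silent --fail --output /dev/null "$url"; then
      echo "ready: $url"
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "not responding: $url" >&2
  return 1
}

# Example call:
#   wait_for_url http://localhost:3000
```

The address matches the port published by -p 3000:8080 in Step 1; if you chose a different host port there, use that one here as well.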

Step 3: Navigating the Open WebUI Dashboard

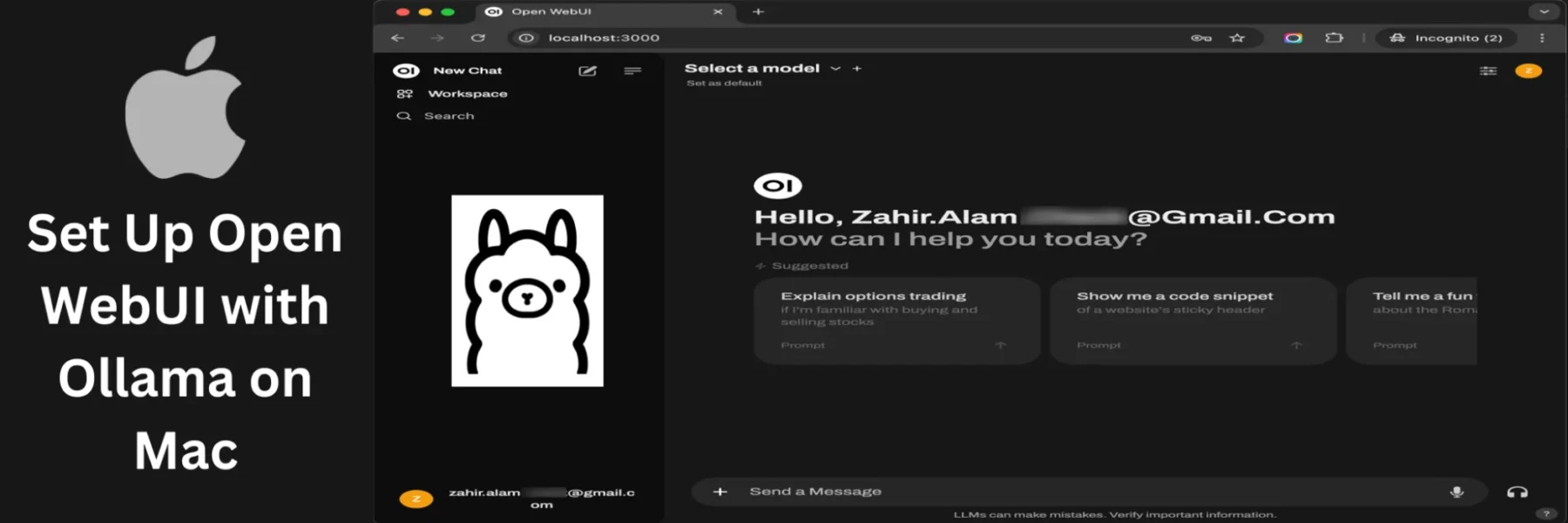

After successfully logging in, you’ll be greeted by the Open WebUI dashboard. This is where you’ll interact with the platform, accessing various models and tools.

The dashboard displays a welcome message with your email, and provides a selection of suggested actions or queries to help you get started. On the left sidebar, you can start a new chat, explore your workspace, or search for specific features.

At the top, you’ll see the “Select a model” option, allowing you to choose from available models to work with, tailoring your experience based on your needs.
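
If the model list turns out to be empty and you are using Ollama, a likely cause is that no model has been downloaded yet. The sketch below lists the models Ollama has locally. It assumes that `ollama list` prints a header row followed by one row per model with the name in the first column; the function name `local_models` is made up for illustration.

```shell
# Print the names of locally installed Ollama models, one per line.
# Assumes `ollama list` prints a header row, then one row per model.
# `local_models` is a hypothetical helper name.
local_models() {
  ollama list | awk 'NR > 1 { print $1 }'
}

# Example call:
#   local_models
```

If nothing is printed, you can download a model with `ollama pull` followed by the name of a model, then reload the Open WebUI page.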

Conclusion

And that’s it! You’ve successfully installed Open WebUI on your Mac. With Open WebUI up and running, you can now explore its features for working with models from runners like Ollama, even in an offline environment.

For any issues during installation, be sure to check the official Open WebUI documentation or reach out to the community for support. Happy exploring!