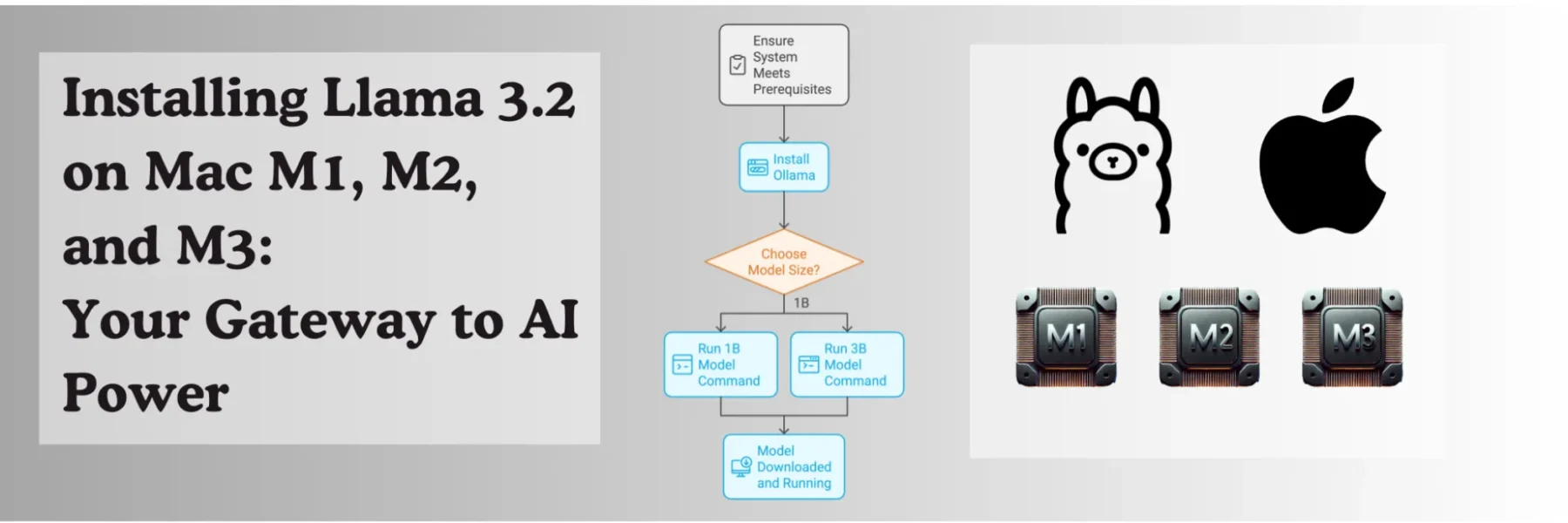

Llama 3.2 is the latest version of Meta’s powerful language model, now available in smaller sizes of 1B and 3B parameters. This makes it more accessible for local use on devices like Mac M1, M2, and M3. In this guide, we’ll walk you through the steps to install Llama 3.2 using Ollama.

Prerequisites

Before you begin, ensure your system meets the following requirements:

- Mac M1, M2, or M3 running macOS

- Sufficient disk space

- Stable internet connection
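The first two requirements can be checked from Terminal before you install anything. The snippet below is a minimal sketch: the helper names `is_apple_silicon` and `has_room` are made up for this example, and the 2 GB threshold is simply the approximate size of the 3B model quoted in the FAQ below, not an official minimum.

```shell
# Hypothetical helpers for checking the prerequisites above.

# Succeeds when the given machine name (default: this machine) is arm64,
# which is what `uname -m` reports on M1, M2, and M3 Macs.
is_apple_silicon() {
  [ "${1:-$(uname -m)}" = "arm64" ]
}

# Succeeds when free space (in KB) covers the needed space (in whole GB).
has_room() {
  [ "$1" -ge $(( $2 * 1024 * 1024 )) ]
}

# Free space on the volume holding the home directory, in 1 KB blocks.
free_kb=$(df -Pk "$HOME" | awk 'NR == 2 { print $4 }')

if is_apple_silicon; then
  echo "Apple Silicon: yes"
else
  echo "Apple Silicon: no"
fi

if has_room "$free_kb" 2; then
  echo "Disk space for the 3B model: ok"
else
  echo "Disk space for the 3B model: low"
fi
```

Each helper takes its input as an argument, so you can try other values without changing machines (for example `has_room 1000000 2`).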

Step 1: Install Ollama

First, install Ollama, a tool for running models like Llama locally on your Mac. For detailed instructions, follow our Step-by-Step Guide to Installing Ollama on Mac.
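Once Ollama is installed, you can confirm that the `ollama` command is available before moving on. This is a minimal sketch; `have_cmd` is a hypothetical helper name used only here.

```shell
# Hypothetical helper: succeeds when the named command is on the PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

if have_cmd ollama; then
  # Print the installed Ollama version.
  ollama --version
else
  echo "ollama not found: install it first, then open a new Terminal window"
fi
```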

Step 2: Download and Run the Llama 3.2 Model

Llama 3.2 is available in two sizes: 1B and 3B. Depending on your needs, choose one of the following commands to download and run the model:

For the 1B model:

ollama run llama3.2:1b

For the 3B model:

ollama run llama3.2

This command will download the selected model and run it. If the model is already downloaded, the same command will simply run it without re-downloading.
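If you would rather separate the download from the first run, the sketch below shows one way to do it. It assumes `ollama pull` (used later in this guide) to download without opening a chat session, and it passes a prompt as an extra argument to `ollama run` so the model prints one answer and exits. The helper `model_tag` is hypothetical.

```shell
# Hypothetical helper: map a size ("1b" or "3b") to the model tag used in this guide.
model_tag() {
  case "$1" in
    1b|1B) echo "llama3.2:1b" ;;
    3b|3B) echo "llama3.2:3b" ;;
    *) echo "unknown size: $1" >&2; return 1 ;;
  esac
}

if command -v ollama >/dev/null 2>&1; then
  tag=$(model_tag 1b)
  ollama pull "$tag"                                    # download only
  ollama run "$tag" "Say hello in one short sentence."  # one-shot prompt, then exit
  ollama list                                           # show models stored locally
fi
```

Without the quoted prompt, `ollama run` opens an interactive session instead.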

Supported Languages

Llama 3.2 supports multiple languages, including:

- English

- German

- French

- Italian

- Portuguese

- Hindi

- Spanish

- Thai

It has been trained on a broad collection of languages, making it versatile for multilingual applications.

Use Cases

1B Model:

- Personal information management

- Multilingual knowledge retrieval

- Rewriting tasks running locally on edge devices

3B Model:

- Following instructions

- Summarization

- Prompt rewriting

- Tool use
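As one illustration of the summarization use case, you can place the contents of a text file inside the prompt. This is a sketch under assumptions: `notes.txt` is a placeholder file name, `summarize_prompt` is a hypothetical helper, and the wording of the prompt is only an example.

```shell
# Hypothetical helper: build a summarization prompt from a text file.
summarize_prompt() {
  printf 'Summarize the following text in three bullet points:\n\n%s\n' "$(cat "$1")"
}

# notes.txt is a placeholder; point this at any plain-text file you have.
file="notes.txt"

if [ -f "$file" ] && command -v ollama >/dev/null 2>&1; then
  # Send the prompt to the 3B model and print its answer.
  ollama run llama3.2 "$(summarize_prompt "$file")"
fi
```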

Troubleshooting

If you encounter any issues during installation or while running the model, ensure that:

- Your system has sufficient resources.

- Your internet connection is stable.

- You’re using the latest version of Ollama.

For more detailed troubleshooting steps, refer to the Ollama documentation.
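The three checks above can be gathered in one place. The sketch below assumes a default Ollama setup, where the local server listens on port 11434; `bytes_to_gib` is a hypothetical helper, and `hw.memsize` is a macOS-specific sysctl key.

```shell
# Hypothetical helper: convert a byte count to whole GiB.
bytes_to_gib() {
  echo $(( $1 / 1024 / 1024 / 1024 ))
}

# Installed RAM (hw.memsize is a macOS sysctl key, reported in bytes).
if mem=$(sysctl -n hw.memsize 2>/dev/null); then
  echo "RAM: $(bytes_to_gib "$mem") GiB"
fi

# Free disk space on the volume that holds your home directory.
df -h "$HOME"

# Ollama version, and whether the local server answers on its default port.
if command -v ollama >/dev/null 2>&1; then
  ollama --version
  if curl -s --max-time 3 http://localhost:11434/ >/dev/null; then
    echo "Ollama server: reachable"
  else
    echo "Ollama server: not reachable (is the Ollama app running?)"
  fi
fi
```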

FAQ: Installing Llama 3.2 on Mac M1, M2, and M3

1. How can I install Llama 3.2 on my Mac?

Llama 3.2 can be installed on Mac M1, M2, or M3 using Ollama. Follow the steps outlined in the guide for detailed instructions on setting up Ollama and downloading the model.

2. Does Llama 3.2 work on macOS?

Yes, Llama 3.2 is compatible with macOS and supports Mac devices like the M1, M2, and M3 models. Ensure you have the latest macOS version for optimal performance.

3. Is it possible to install Llama 3.2 on a MacBook?

Yes, you can install Llama 3.2 on MacBooks equipped with M1, M2, or M3 chips using Ollama. The installation process is the same as on other Macs.

4. Where can I download Llama 3.2?

Llama 3.2 can be downloaded using Ollama. Use the command:

- For the 1B model:

ollama run llama3.2:1b

- For the 3B model:

ollama run llama3.2

5. What are the system requirements for Llama 3.2 on a Mac?

To follow this guide you need a Mac with an M1, M2, or M3 chip, sufficient disk space, and a stable internet connection for the download. These are the same items listed under Prerequisites above.

6. How do I check the hardware requirements for running Llama 3.2?

For the 1B and 3B models, ensure your Mac has adequate RAM and disk space. The 1B model requires fewer resources, making it ideal for lighter tasks. As a baseline for disk space, the model downloads are approximately 1.3 GB (1B) and 2.0 GB (3B).

7. How do I install Ollama on Mac M1, M2, or M3 for running Llama 3.2?

Ollama is essential for running Llama models on your Mac. Follow our step-by-step guide to install Ollama and configure it properly for Llama 3.2.

8. Can I run Llama 3.2 locally on my Mac?

Yes, you can run Llama 3.2 locally using Ollama. Once downloaded, the model runs on your Mac without needing a continuous internet connection.
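Before going offline, you may want to confirm that the model is already stored on your Mac. The sketch below checks the first column of `ollama list` for a model name; `has_model` is a hypothetical helper that reads that listing on standard input.

```shell
# Hypothetical helper: read `ollama list` output on stdin and succeed
# when the first column contains the given model name.
has_model() {
  awk -v m="$1" '$1 == m { found = 1 } END { exit !found }'
}

if command -v ollama >/dev/null 2>&1; then
  if ollama list | has_model "llama3.2:1b"; then
    echo "llama3.2:1b is stored locally and can run offline"
  else
    echo "llama3.2:1b has not been downloaded yet"
  fi
fi
```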

9. What is the download size for Llama 3.2 models?

- The 1B model is approximately 1.3 GB, making it suitable for devices with limited storage space.

- The 3B model is around 2.0 GB, requiring more disk space but offering enhanced capabilities.

10. What languages are supported by Llama 3.2?

Llama 3.2 supports multiple languages, including English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai, making it versatile for multilingual applications.

11. What are the use cases for the 1B and 3B models?

- 1B Model: Suitable for basic tasks like information management and local knowledge retrieval on smaller devices.

- 3B Model: Ideal for advanced tasks such as instruction-following, summarization, and multilingual interactions.

12. How do I install Llama 3.2 specifically on Mac M1 or M3?

The installation process is the same for Mac M1 and M3. Ensure your Mac meets the minimum hardware requirements, and follow the steps in our guide using Ollama.

13. Is Llama 3.2 available for iOS or Android?

This guide covers running Llama 3.2 on Macs (M1, M2, and M3) with Ollama, and those steps do not carry over to iOS or Android. The 1B and 3B models are small enough to target mobile and edge devices, but running them there requires a different runtime than the one described here.

14. Can I run Llama 3.2 for iPhone?

The Ollama setup described in this guide does not apply to iPhones. To use these instructions, run the model on a Mac.

15. What is the difference between Llama 3.2 1B and 3B models?

- 1B Model: Lower hardware requirements and quicker setup, ideal for lightweight tasks.

- 3B Model: Higher hardware requirements, better for complex AI applications and larger data handling.

16. How do I run Llama 3.2 using Ollama?

Use the following commands to run the model:

- For the 1B version:

ollama run llama3.2:1b

- For the 3B version:

ollama run llama3.2

17. How to install Llama 3 on Mac?

To install Llama 3 (Llama 3.2 specifically) on Mac, follow the installation guide with Ollama. It covers both the setup and the steps needed to run the model.

18. Can Llama 3.2 run on Ubuntu or other platforms?

Yes, Llama 3.2 can be run on Ubuntu. For detailed instructions, check out our step-by-step guide on running Llama 3.2 on Ubuntu 24.04. For other platforms, you may need to follow platform-specific configurations.

19. How do I check the model sizes for Llama 3.2?

To check the model sizes for Llama 3.2 using Ollama, follow these steps:

a. Open your terminal on your Mac (M1, M2, or M3).

b. Run the command to pull the specific Llama 3.2 model you want to check:

- For the 1B model, use:

ollama pull llama3.2:1b

- For the 3B model, use:

ollama pull llama3.2:3b

c. The terminal will show the download progress, including the total size of the model. For instance:

- The 1B model shows a size of approximately 1.3 GB.

- The 3B model shows a size of approximately 2.0 GB.

This procedure allows you to verify the model sizes directly before completing the download.
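After a model has been downloaded, its size on disk can also be read back without pulling again. The sketch below assumes that `ollama list` prints columns in the order NAME, ID, SIZE (value and unit), which may differ between Ollama versions; `model_size` is a hypothetical helper.

```shell
# Hypothetical helper: read `ollama list` output on stdin and print the
# size of one model. Assumes the columns are NAME, ID, SIZE (value and unit).
model_size() {
  awk -v m="$1" '$1 == m { print $3, $4 }'
}

if command -v ollama >/dev/null 2>&1; then
  ollama list | model_size "llama3.2:1b"   # size on disk of the 1B model, if present
  ollama show llama3.2:1b                  # details about the downloaded model
fi
```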

20. What are the supported devices for Llama 3.2?

With Ollama, Llama 3.2 runs locally on Macs with M1, M2, and M3 chips. Check the Prerequisites section to confirm that your machine qualifies.

21. How do I install and configure Llama 3.2 on a MacBook using Ollama?

The installation is the same for MacBooks with M1, M2, or M3 chips. Ensure you have sufficient resources and follow the guide to set up Ollama and download the model.

22. What troubleshooting steps should I follow if Llama 3.2 fails to install?

Check that you have the latest version of Ollama and macOS updates. Ensure sufficient disk space and a stable internet connection. Refer to the Ollama documentation for more troubleshooting details.

23. Can Llama 3.2 be run on Mac without downloading it each time?

Yes, once the model is downloaded via Ollama, you can run it locally without needing to download it again.

24. How do I run Llama 3.2 for specific tasks like summarization or prompt rewriting?

The 3B model is the better fit for tasks such as summarization and prompt rewriting. Start it with ollama run llama3.2, then type your request at the prompt (for example, paste a passage and ask for a summary).
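For scripted use, a request can also be sent to the local Ollama server over HTTP. The sketch below assumes a default setup (server on port 11434, `/api/generate` endpoint with `model`, `prompt`, and `stream` fields). `build_payload` is a hypothetical helper that does no JSON escaping, so the prompt must not contain double quotes or backslashes.

```shell
# Hypothetical helper: build the JSON body for a single, non-streamed request.
# Assumption: the prompt contains no double quotes or backslashes,
# because this sketch does no JSON escaping.
build_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}' "$1" "$2"
}

# Only send the request when the local server answers.
if curl -s --max-time 3 http://localhost:11434/ >/dev/null 2>&1; then
  curl -s http://localhost:11434/api/generate \
    -d "$(build_payload llama3.2 'Rewrite this prompt to be more specific: tell me about Macs')"
fi
```

The server replies with a JSON object; the generated text is in its `response` field.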

25. Is there a Llama 3.2 model with vision support?

Yes. For the vision-capable variant, check out our guide: Install Llama 3.2 Vision on Mac M1, M2, and M3.

Conclusion

Installing Llama 3.2 on Mac M1, M2, and M3 is straightforward with Ollama. By following the steps outlined above, you can have the model up and running in no time, enabling you to leverage its capabilities for your AI projects.