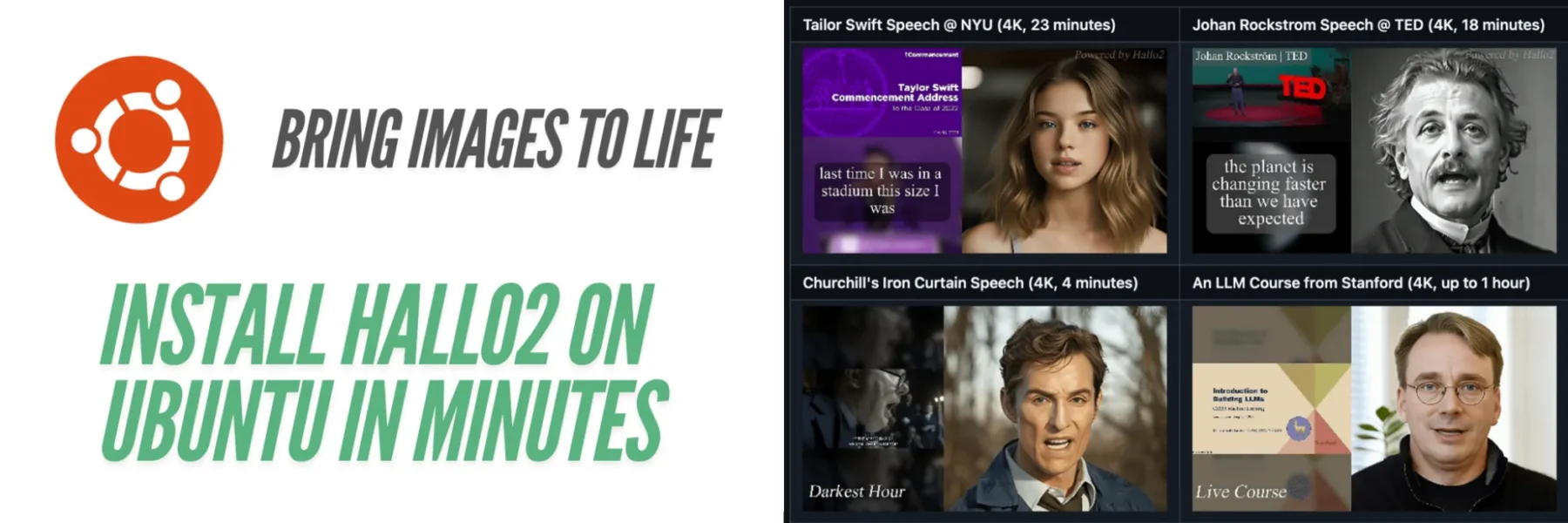

Hallo2 is a framework for creating long-duration, high-resolution, audio-driven portrait image animations. It can generate videos at up to 4K resolution that run for an hour or more, which makes it well suited to extended animations with fine detail. This tutorial walks you step by step through installing and running Hallo2 on Ubuntu.

Prerequisites

Before starting, ensure that you have:

- Operating System: Ubuntu 20.04 or Ubuntu 22.04

- CUDA: CUDA 11.8 (for GPU acceleration)

- GPU: Tested with NVIDIA A100 (other CUDA-enabled GPUs may also work)

- Python: Python 3.10 or higher
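The Python and CUDA requirements above can be checked from a script before you go any further. The following is a minimal sketch, not part of Hallo2: the helper names are hypothetical, and it assumes that nvcc is on your PATH and that its version output contains a string of the form "release 11.8". It does not check the operating system or the GPU model.

```python
import re
import shutil
import subprocess
import sys


def python_ok(version_info=sys.version_info, minimum=(3, 10)):
    """Return True if the interpreter is at least the minimum (major, minor)."""
    return tuple(version_info[:2]) >= minimum


def parse_nvcc_release(output):
    """Extract (major, minor) from `nvcc --version` text, or None if absent."""
    match = re.search(r"release (\d+)\.(\d+)", output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def installed_cuda_release():
    """Return the CUDA toolkit release reported by nvcc, or None if nvcc is missing."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(
        ["nvcc", "--version"], capture_output=True, text=True, check=False
    )
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    print("Python >= 3.10:", python_ok())
    print("CUDA toolkit release (expected (11, 8)):", installed_cuda_release())
```

A result of None for the CUDA release only means that nvcc was not found or its output was not recognized; it does not prove that CUDA is absent.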

1. Clone the Hallo2 Repository

First, clone the Hallo2 repository from GitHub to obtain the necessary code, scripts, and configuration files.

# Clone the Hallo2 repository

git clone https://github.com/fudan-generative-vision/hallo2.git

# Navigate into the project directory

cd hallo2

This step is essential because it provides all the scripts you will need to run Hallo2.

2. Install Git LFS

Git LFS (Large File Storage) is required to download large model files. Install it using the following commands:

# Install Git LFS

sudo apt-get install git-lfs

# Set up Git LFS

git lfs install

3. Install Conda

If you don’t have Conda installed, you can install Miniconda to create and manage the Python environment. Follow these steps:

# Download Miniconda installer

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh

# Run the installer

bash Miniconda3-latest-Linux-x86_64.sh

# Restart your terminal

After installing Conda, create a Conda environment for Hallo2 with Python 3.10:

conda create -n hallo python=3.10

conda activate hallo

4. Install Required Python Packages

With your Conda environment active, install the required Python packages. Start by installing PyTorch with CUDA 11.8 support:

pip install torch==2.2.2 torchvision==0.17.2 torchaudio==2.2.2 --index-url https://download.pytorch.org/whl/cu118

Next, install the remaining dependencies by running:

pip install -r requirements.txt

Also, install FFmpeg, which is necessary for video processing:

sudo apt-get install ffmpeg

5. Download Pretrained Models

Hallo2 requires pretrained models, which you can download from Hugging Face. Run the following command to clone them into a pretrained_models folder (this relies on the Git LFS setup from step 2):

git clone https://huggingface.co/fudan-generative-ai/hallo2 pretrained_models

Alternatively, you can download each pretrained model from its own repository. Make sure to organize them in the following directory structure:

./pretrained_models/

|-- audio_separator/

|-- CodeFormer/

|-- face_analysis/

|-- facelib

|-- hallo2

|-- motion_module/

|-- realesrgan

|-- sd-vae-ft-mse/

|-- stable-diffusion-v1-5/

|-- wav2vec/

6. Edit the Configuration File

The next step is to configure the paths in the YAML file. The default configuration file (configs/inference/long.yaml) provides example paths for the source image and driving audio:

source_image: ./examples/reference_images/1.jpg

driving_audio: ./examples/driving_audios/1.wav

You can replace these with your own files by updating the source_image and driving_audio fields with the appropriate paths.
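If you would rather leave the default file untouched, you can write a modified copy and pass that copy to the inference script instead. The sketch below is one way to do this with only the standard library. It assumes each field sits on its own line in the form key: value, as in the excerpt above, and it is a plain text substitution rather than a real YAML parser. The function names and the file names my_run.yaml, my_face.jpg, and my_voice.wav are hypothetical.

```python
import re
from pathlib import Path


def override_fields(config_text, overrides):
    """Return config_text with each `key: value` line replaced for the given keys.

    Every line whose key matches is rewritten, so a key that appears at
    several nesting levels would be changed everywhere.
    """
    for key, value in overrides.items():
        pattern = re.compile(
            rf"^([ \t]*){re.escape(key)}\s*:.*$", flags=re.MULTILINE
        )
        # A function replacement avoids backslash handling in the new value.
        config_text, count = pattern.subn(
            lambda m: f"{m.group(1)}{key}: {value}", config_text
        )
        if count == 0:
            raise KeyError(f"field not found in config: {key}")
    return config_text


def write_custom_config(src, dst, source_image, driving_audio):
    """Copy the config at src to dst with the two input fields swapped."""
    text = Path(src).read_text(encoding="utf-8")
    text = override_fields(
        text, {"source_image": source_image, "driving_audio": driving_audio}
    )
    Path(dst).write_text(text, encoding="utf-8")
    return dst


if __name__ == "__main__":
    default_config = Path("configs/inference/long.yaml")
    if default_config.is_file():
        # Hypothetical output and input file names; substitute your own.
        write_custom_config(
            default_config,
            "configs/inference/my_run.yaml",
            "./my_face.jpg",
            "./my_voice.wav",
        )
```

You would then point the --config argument at the new file rather than at long.yaml.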

Make sure the paths for pretrained models are correct in the configuration, and check that the save_path is where you want to store the results. The default is:

save_path: ./output_long/debug/

7. Running Inference

Now you are ready to run inference and generate animations based on your source image and driving audio.

Run the following command to start the animation generation process:

python scripts/inference_long.py --config ./configs/inference/long.yaml

This script will generate an animation based on the image and audio, and save the result to the location specified in the save_path.

8. (Optional) High-Resolution Video Upsampling

To further enhance the quality of the video, you can run the high-resolution upsampling script. This improves both the background and face areas in the video:

python scripts/video_sr.py --input_path [input_video] --output_path [output_dir] --bg_upsampler realesrgan --face_upsample -w 1 -s 4

Replace [input_video] with the path to the generated video and specify the output directory in [output_dir]. This command will enhance the video and save the result in the specified folder.
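If you call the upsampling script from your own Python code, building the command as an argument list avoids shell quoting problems with paths that contain spaces. This is a minimal sketch under that assumption. The function names are hypothetical, the flags are copied verbatim from the command above, and the w and s parameters simply pass through to -w and -s without interpreting them.

```python
import subprocess
import sys


def video_sr_command(input_video, output_dir, w=1, s=4):
    """Build the argument list for scripts/video_sr.py using the flags shown above."""
    return [
        sys.executable,
        "scripts/video_sr.py",
        "--input_path", str(input_video),
        "--output_path", str(output_dir),
        "--bg_upsampler", "realesrgan",
        "--face_upsample",
        "-w", str(w),
        "-s", str(s),
    ]


def run_video_sr(input_video, output_dir):
    """Run the upsampling script from the hallo2 project root; raise if it fails."""
    subprocess.run(video_sr_command(input_video, output_dir), check=True)
```

Using sys.executable means the script runs with the same interpreter, and therefore the same Conda environment, as the calling code.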

9. Troubleshooting

Here are some common issues and their solutions:

- CUDA not found: Make sure CUDA 11.8 is installed correctly by running nvcc --version. If not, follow a CUDA installation guide.

- Pretrained model not found: Verify that all pretrained models are placed in the correct folders under pretrained_models.

- Slow inference: Ensure that you are using a CUDA-enabled GPU for faster inference. Running on a CPU will significantly slow down the process.
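Two of these checks are easy to script. The sketch below, run from the hallo2 project root, reports which folders from the directory layout in step 5 are missing and whether PyTorch can see a CUDA device. The function names are hypothetical. It only confirms that the top-level folders exist, not that the files inside them are complete.

```python
from pathlib import Path

# Folder names copied from the directory layout in step 5.
EXPECTED_MODEL_DIRS = [
    "audio_separator",
    "CodeFormer",
    "face_analysis",
    "facelib",
    "hallo2",
    "motion_module",
    "realesrgan",
    "sd-vae-ft-mse",
    "stable-diffusion-v1-5",
    "wav2vec",
]


def missing_model_dirs(root="./pretrained_models"):
    """Return the expected subfolder names that do not exist under root."""
    root_path = Path(root)
    return [name for name in EXPECTED_MODEL_DIRS if not (root_path / name).is_dir()]


def cuda_status():
    """Describe whether PyTorch is installed and can see a CUDA device."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed in this environment"
    if torch.cuda.is_available():
        return f"CUDA available; PyTorch was built against CUDA {torch.version.cuda}"
    return "PyTorch cannot see a CUDA device"


if __name__ == "__main__":
    print("Missing model folders:", missing_model_dirs() or "none")
    print(cuda_status())
```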

10. Hardware Considerations

To get the best performance out of Hallo2, ensure that you are using:

- GPU: A CUDA-compatible GPU is recommended for faster inference. The repository has been tested with NVIDIA A100, but other GPUs should work if they support CUDA.

- Memory: Depending on the model size and image resolution, your GPU should have at least 16GB of memory to run the models smoothly.

11. Viewing Results

Once inference is complete, the generated animation will be saved in the directory you specified in the configuration file. You can open and view the resulting video using a media player like VLC.
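If the output directory accumulates several runs, a short script can locate the most recent result. This sketch assumes the output is written as .mp4 files somewhere under save_path. That extension is an assumption, not something stated above, so adjust the pattern if your files differ. The function name is hypothetical.

```python
from pathlib import Path


def latest_video(save_path="./output_long/debug/", pattern="*.mp4"):
    """Return the most recently modified matching file under save_path, or None."""
    root = Path(save_path)
    if not root.is_dir():
        return None
    # Search subfolders too, then order by modification time.
    videos = sorted(root.rglob(pattern), key=lambda p: p.stat().st_mtime)
    return videos[-1] if videos else None


if __name__ == "__main__":
    print("Most recent video:", latest_video())
```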

Conclusion

By following this tutorial, you should now be able to install, configure, and run Hallo2 on your Ubuntu system. With the pretrained models and scripts in place, you can generate high-quality animations using your own images and audio. For further customization, feel free to explore the configuration file and experiment with different settings.